I’ll be doing a series on using OpenCV and Tesseract to take a scanned image of an equation, read it in, graph it, and give related data. I was surprised at how well the results turned out =)

I will be using OpenCV 2.4.2 and Tesseract OCR 3.02.02.

I have also made two tutorials on installing Tesseract and OpenCV for Vista x86 with Microsoft Visual Studio 2008 Express. For other setups, see the official sites for documentation on installing the libraries on your system.

Parts

Equation OCR Part 1: Using contours to extract characters in OpenCV

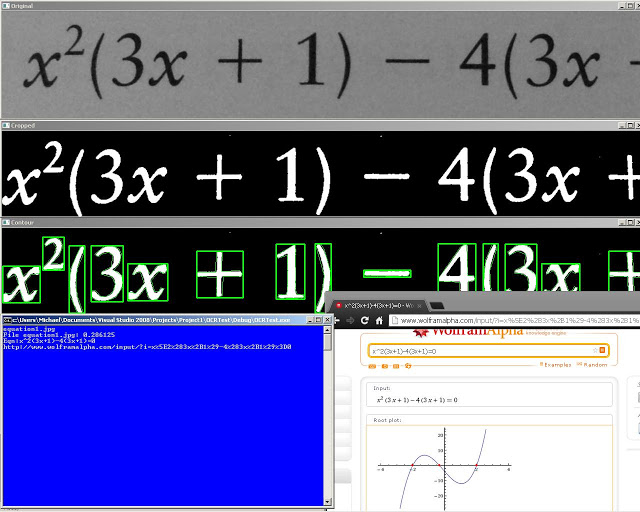

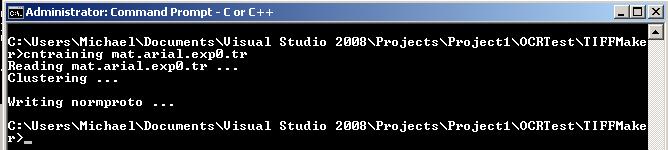

Equation OCR Part 2: Training characters with Tesseract OCR

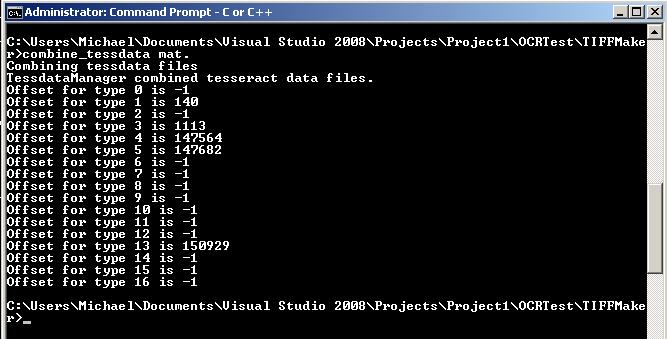

Equation OCR Part 3: Equation OCR

Tutorials

Extraction:

We can break extraction down into two further steps: preprocessing and contour analysis.

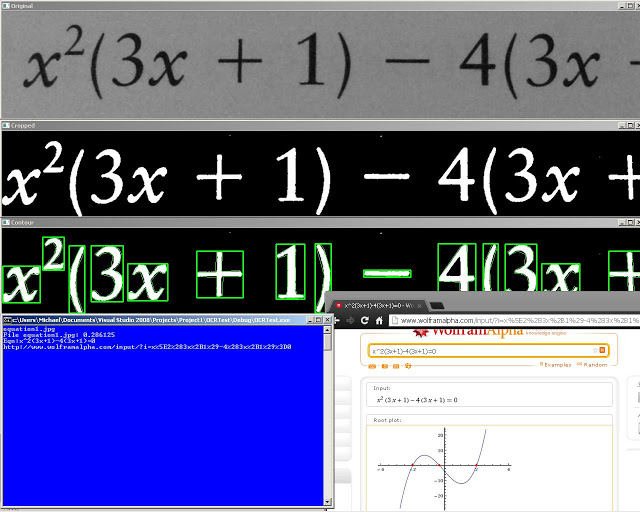

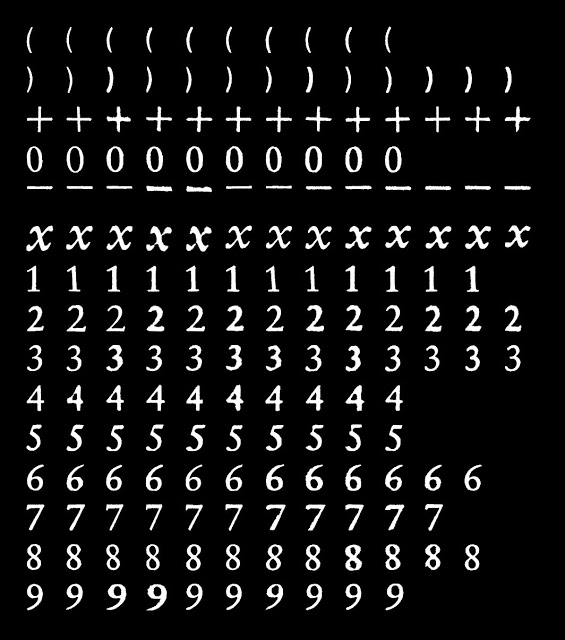

Preprocessing

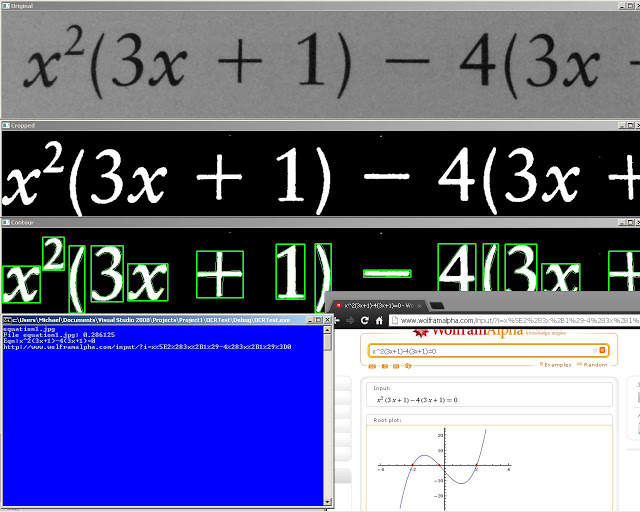

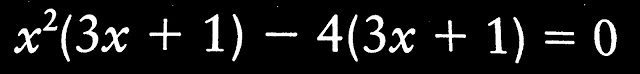

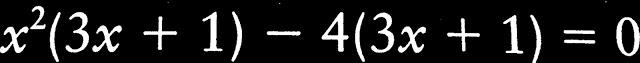

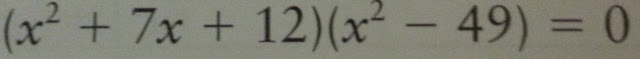

The first step of preprocessing is to smooth out the image and make it a binary image (black or white) for contour analysis. This is our original image:

cv::Mat img = cv::imread("equation1.jpg", 0); // 0 loads the image as grayscale

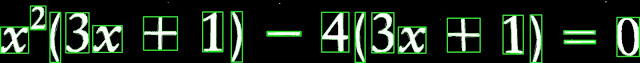

We first apply a Gaussian blur to smooth out the image. We then use adaptive thresholding to binarize the image (make every pixel black or white), and finally invert the colours, since OpenCV’s contour detection treats white pixels as objects and black as the background.

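cv::Size size(3,3);

cv::GaussianBlur(img, img, size, 0); // 3x3 kernel; sigma 0 lets OpenCV derive it from the kernel size

cv::adaptiveThreshold(img, img, 255, CV_ADAPTIVE_THRESH_MEAN_C, CV_THRESH_BINARY, 75, 10); // 75x75 neighbourhood, offset 10

cv::bitwise_not(img, img); // invert: text becomes white on black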
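To make it clearer what the threshold and the inversion do to each pixel, here is a minimal sketch in plain C++ without OpenCV. It is not OpenCV's implementation: `GrayImage` and `thresholdAndInvert` are hypothetical names made up for this illustration, and replicating the edge pixels at the border is an assumption of the sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical minimal grayscale image type, used only for this sketch.
struct GrayImage {
    int rows;
    int cols;
    std::vector<std::uint8_t> pixels;  // row-major, rows * cols entries

    std::uint8_t at(int r, int c) const {
        // Clamp coordinates so that border pixels are replicated outwards
        // (an assumption of this sketch).
        r = std::max(0, std::min(r, rows - 1));
        c = std::max(0, std::min(c, cols - 1));
        return pixels[r * cols + c];
    }
};

// Mean adaptive threshold followed by inversion: a pixel becomes white (255)
// when it is NOT brighter than (local mean - offset), i.e. when it is ink.
GrayImage thresholdAndInvert(const GrayImage& src, int blockSize, double offset) {
    assert(blockSize > 1 && blockSize % 2 == 1);  // neighbourhood must be odd-sized
    const int half = blockSize / 2;
    GrayImage dst{src.rows, src.cols,
                  std::vector<std::uint8_t>(src.pixels.size(), 0)};
    for (int r = 0; r < src.rows; ++r) {
        for (int c = 0; c < src.cols; ++c) {
            double sum = 0.0;
            for (int dr = -half; dr <= half; ++dr)
                for (int dc = -half; dc <= half; ++dc)
                    sum += src.at(r + dr, c + dc);
            const double localThreshold = sum / (blockSize * blockSize) - offset;
            const bool isBackground = src.at(r, c) > localThreshold;
            dst.pixels[r * src.cols + c] = isBackground ? 0 : 255;
        }
    }
    return dst;
}
```

On a uniformly bright page every pixel is above its local mean minus the offset, so the whole output is black. Only pixels clearly darker than their surroundings (the ink) come out white, which is why uneven lighting across a scan matters much less than with a single global threshold.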

Next we have to fix the angle of the text. In this case the offset angle isn’t bad, maybe plus or minus 1 or 2 degrees, but in other cases where the angle is greater we will need to correct it. We can do this by finding the minimum-area bounding box around the line of text. This method works best for long, straight lines of text. I later discovered that it fails when the expression contains the variable y or large brackets, or when it is very short: the smallest-area rectangle then ends up with a large rotation. It suits our purpose for long equations, though. There is probably a better way to do this with Hu moments, but this will suffice. I took this method from another blog:

http://felix.abecassis.me/2011/10/opencv-rotation-deskewing/

Now that we have this rotated box around our aligned text, we can simply make that box our new bounding box.
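The angle handling can be sketched in plain C++. This assumes the rectangle angle is reported in the range [-90, 0], as `cv::minAreaRect` does in the OpenCV 2.4 series, and folds it into the smallest equivalent skew the way the linked post does. `normalizeSkewAngle` and `isPlausibleSkew` are hypothetical helper names; the plausibility guard is one possible way to catch the failure case described above, not something the original code does.

```cpp
#include <cassert>
#include <cmath>

// Fold a rectangle angle in [-90, 0] into the smallest equivalent skew.
// Angles below -45 describe the same box with width and height swapped,
// so adding 90 gives the small correction we actually want.
double normalizeSkewAngle(double rectAngleDegrees) {
    assert(rectAngleDegrees >= -90.0 && rectAngleDegrees <= 0.0);
    return rectAngleDegrees < -45.0 ? rectAngleDegrees + 90.0
                                    : rectAngleDegrees;
}

// Hypothetical guard: treat a correction larger than maxAbsDegrees as a
// sign that the minimum-area box did not follow the text line.
bool isPlausibleSkew(double skewDegrees, double maxAbsDegrees) {
    return std::fabs(skewDegrees) <= maxAbsDegrees;
}
```

For example, a reported angle of -88 degrees normalizes to a 2 degree skew, which passes a 10 degree limit. A reported angle of -50 degrees normalizes to 40 degrees, which the guard would reject, so the image could be left unrotated instead.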

Contour Extraction

Next we can use OpenCV’s contour function to find all the “blobs” or shapes. I also check that each shape is larger than a certain area, because a very small shape is probably junk. Another issue is that some characters, like the equals sign “=”, consist of two shapes, but this is easily fixed. Two shapes are stacked on top of one another if the x coordinates of their centres are within a certain threshold of each other. When that is the case, we can combine the shapes and make a new contour out of them.

cv::findContours(cropped, contours, hierarchy, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_TC89_KCOS, cv::Point(0, 0));
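The area check and the merging of stacked shapes can be sketched on bounding boxes alone. This is a simplified illustration in plain C++, not the code from the full source: `Box`, `unite`, and `filterAndMerge` are hypothetical names, the area is taken from the bounding box rather than the contour, and both thresholds are assumptions you would tune for your images.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical axis-aligned bounding box, standing in for cv::Rect.
struct Box {
    int x, y, width, height;
    int area() const { return width * height; }
    double centreX() const { return x + width / 2.0; }
};

// Smallest box that contains both a and b.
Box unite(const Box& a, const Box& b) {
    const int left = std::min(a.x, b.x);
    const int top = std::min(a.y, b.y);
    const int right = std::max(a.x + a.width, b.x + b.width);
    const int bottom = std::max(a.y + a.height, b.y + b.height);
    return Box{left, top, right - left, bottom - top};
}

// Drop boxes smaller than minArea, then merge boxes whose centres are within
// maxCentreGap pixels of each other horizontally (e.g. the two bars of "=").
std::vector<Box> filterAndMerge(const std::vector<Box>& boxes,
                                int minArea, double maxCentreGap) {
    assert(minArea >= 0 && maxCentreGap >= 0.0);
    std::vector<Box> kept;
    for (const Box& box : boxes)
        if (box.area() >= minArea) kept.push_back(box);

    // Sort left to right so stacked shapes end up next to each other.
    std::sort(kept.begin(), kept.end(), [](const Box& a, const Box& b) {
        return a.centreX() < b.centreX();
    });

    std::vector<Box> merged;
    for (const Box& box : kept) {
        if (!merged.empty() &&
            std::fabs(box.centreX() - merged.back().centreX()) <= maxCentreGap)
            merged.back() = unite(merged.back(), box);
        else
            merged.push_back(box);
    }
    return merged;
}
```

A side effect of sorting by centre is that the result comes out in left-to-right reading order, which is convenient when the characters are later passed to the OCR step one by one.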

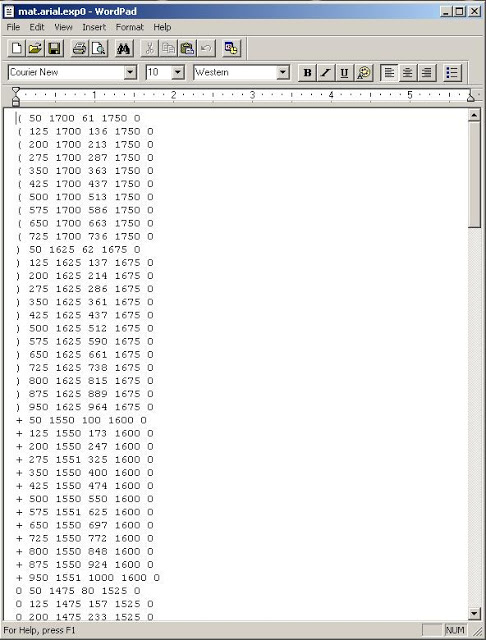

Now that we have found all our contours, all we need to do is extract each one and save it. We can take the bounding rectangle of each contour and cut that part out of the original image. However, in some cases the bounding rectangle will include part of another shape. To prevent this, we can use a “mask”, basically a filter, to copy only the pixels inside the contour, and then crop to the bounding rectangle.

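cv::Mat mask = cv::Mat::zeros(image.size(), CV_8UC1); // all-black mask, same size as the image

cv::drawContours(mask, contours_poly, i, cv::Scalar(255), CV_FILLED); // paint contour i in white

cv::Mat extractPic;

image.copyTo(extractPic, mask); // copy only the pixels under the mask

cv::Mat resizedPic = extractPic(r); // crop to r, the bounding rectangle of contour i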
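To show why the mask matters, here is a small sketch of the same idea in plain C++: pixels inside the rectangle are copied only where the mask is non-zero, so a neighbouring shape that pokes into the rectangle is left out. `Gray`, `Region`, and `extractMasked` are hypothetical names for this illustration and stand in for `cv::Mat`, `cv::Rect`, and the `copyTo` plus crop steps.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical row-major 8-bit image, standing in for a single-channel cv::Mat.
struct Gray {
    int rows, cols;
    std::vector<std::uint8_t> data;
    std::uint8_t& at(int r, int c) { return data[r * cols + c]; }
    std::uint8_t at(int r, int c) const { return data[r * cols + c]; }
};

// Hypothetical rectangle, standing in for cv::Rect.
struct Region {
    int x, y, width, height;
};

// Copy the pixels inside `region`, keeping only those where the mask is
// non-zero; everything else in the output stays black.
Gray extractMasked(const Gray& image, const Gray& mask, const Region& region) {
    assert(image.rows == mask.rows && image.cols == mask.cols);
    assert(region.x >= 0 && region.y >= 0);
    assert(region.x + region.width <= image.cols);
    assert(region.y + region.height <= image.rows);

    Gray out{region.height, region.width,
             std::vector<std::uint8_t>(region.width * region.height, 0)};
    for (int r = 0; r < region.height; ++r) {
        for (int c = 0; c < region.width; ++c) {
            const int srcRow = region.y + r;
            const int srcCol = region.x + c;
            if (mask.at(srcRow, srcCol) != 0)
                out.at(r, c) = image.at(srcRow, srcCol);
        }
    }
    return out;
}
```

If the image is white everywhere inside the rectangle but the mask covers only three of its four pixels, the fourth pixel comes out black in the result, even though the plain rectangular crop would have included it.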

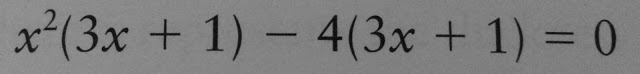

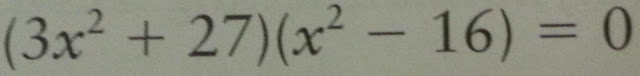

Here are some sample equations you can use:

Source Code

Source code is available here: http://pastebin.com/Q2x8kHmG